Discover if A/B testing boosts conversion rates. Learn proven strategies, examples, and best practices to optimize your website effectively.

In the digital landscape, conversion rates are the lifeblood of any online business. Whether you’re running an e-commerce store, a SaaS platform, or a content-driven website, the percentage of visitors who take a desired action—be it purchasing a product, signing up for a newsletter, or downloading a resource—directly impacts your bottom line. However, achieving high conversion rates is no small feat. It requires constant experimentation, refinement, and data-driven decision-making. This is where A/B testing, also known as split testing, comes into play.

A/B testing involves comparing two versions of a digital asset—such as a webpage, app screen, or email—to determine which one performs better in achieving a specific goal. By systematically testing variations and analyzing the results, businesses can optimize their user experience, reduce bounce rates, and ultimately drive more conversions. But is A/B testing truly worth the effort? Does it deliver the promised boost in conversion rates, or is it an overhyped strategy better suited for large corporations with massive traffic? This comprehensive guide explores the ins and outs of A/B testing, its benefits, challenges, and proven strategies to help you decide if it’s the right approach for your business.

What Is A/B Testing and Why Does It Matter?

A/B testing is a controlled experiment where two versions of a digital asset (Version A, the control, and Version B, the variation) are shown to different segments of your audience. The goal is to measure which version performs better based on predefined metrics, such as conversion rate, click-through rate (CTR), or time spent on page. For example, you might test two different headlines on a landing page to see which one encourages more sign-ups.

The power of A/B testing lies in its ability to eliminate guesswork. Instead of relying on intuition or assumptions about what your audience prefers, you use real user data to make informed decisions. This data-driven approach can lead to significant improvements in user experience and conversion rates, helping you get more value from your existing traffic.

Consider this scenario: If your website has a 1% conversion rate and attracts 10,000 monthly visitors, only 100 users will convert. If only 1% of those sign-ups upgrade to a $10/month paid plan, you’re left with a single paying customer and just $10 in monthly revenue. By increasing your conversion rate to 2%, you could double your revenue without spending more on advertising. A/B testing provides a structured way to achieve such incremental improvements, making it a critical tool for conversion rate optimization (CRO).

The A/B Testing Process: A Step-by-Step Guide

To maximize the effectiveness of A/B testing, it’s essential to follow a structured process. Here’s a detailed breakdown of the steps involved:

1. Identify Goals and Metrics

Before launching an A/B test, clearly define what you want to achieve. Common goals include increasing purchases, sign-ups, downloads, or form submissions. Next, select relevant metrics to measure success, such as:

- Conversion rate: The percentage of visitors who complete the desired action.

- Click-through rate (CTR): The percentage of users who click on a specific link or button.

- Bounce rate: The percentage of visitors who leave the page without taking any action.

- Time on page: The average time users spend on the page.

Set specific, measurable targets for improvement. For example, aim to increase your conversion rate from 1% to 1.5% within a month.
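As a rough illustration of how the metrics above are derived from raw counts, here is a minimal Python sketch. The `PageStats` class, its field names, and the sample numbers are hypothetical and chosen only for the example; your analytics tool will expose its own fields.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """Raw counts for one page over a reporting period (hypothetical field names)."""
    visitors: int          # visitors who saw the page
    conversions: int       # visitors who completed the desired action
    cta_clicks: int        # visitors who clicked the tracked link or button
    bounces: int           # visitors who left without taking any action
    total_seconds: float   # time on page summed over all visitors


def rate(part: int, whole: int) -> float:
    """Return part/whole as a percentage, or 0.0 when there is no traffic."""
    return 100.0 * part / whole if whole else 0.0


def summarize(stats: PageStats) -> dict:
    """Compute the four metrics listed above from raw counts."""
    avg_time = stats.total_seconds / stats.visitors if stats.visitors else 0.0
    return {
        "conversion_rate_pct": rate(stats.conversions, stats.visitors),
        "ctr_pct": rate(stats.cta_clicks, stats.visitors),
        "bounce_rate_pct": rate(stats.bounces, stats.visitors),
        "avg_time_on_page_s": avg_time,
    }


# Made-up sample numbers, for illustration only.
stats = PageStats(visitors=10_000, conversions=100, cta_clicks=450,
                  bounces=6_200, total_seconds=520_000)
summary = summarize(stats)
print(summary)
```

Computing every metric from the same visitor count keeps the control and the variation comparable when you later put their numbers side by side.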

2. Develop Test Hypotheses

A hypothesis is the foundation of any A/B test. It’s an educated prediction about how a specific change will impact user behavior. A strong hypothesis includes:

- Change: The element you plan to modify (e.g., button color, headline text).

- Impact: The expected effect on user behavior (e.g., increased clicks).

- Reasoning: The rationale behind the prediction (e.g., the new color is more attention-grabbing).

Example hypothesis: “Changing the CTA button text from ‘Sign Up’ to ‘Get Started Free’ will increase sign-ups because it emphasizes the free trial and reduces perceived risk.”

Use existing data—such as website analytics, heatmaps, or user feedback—to identify areas for improvement and prioritize tests with the highest potential impact.
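One way to keep hypotheses consistent across a team is to record the three parts as structured data. The sketch below is only an illustration; the `Hypothesis` class and its `statement` method are hypothetical names, not part of any testing tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    change: str     # the element being modified
    impact: str     # the expected effect on user behavior
    reasoning: str  # why the change should have that effect

    def statement(self) -> str:
        """Render the hypothesis in the 'change will impact because reasoning' form."""
        return f"{self.change} will {self.impact} because {self.reasoning}."


h = Hypothesis(
    change="Changing the CTA button text from 'Sign Up' to 'Get Started Free'",
    impact="increase sign-ups",
    reasoning="it emphasizes the free trial and reduces perceived risk",
)
print(h.statement())
```

Storing hypotheses this way also makes it easier to document learnings later, because each test result can be filed against the prediction it was meant to check.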

3. Create Test Variations

Design the control (Version A) and one or more variations (Version B, C, etc.). Each variation should include a specific, measurable change, such as:

- Different headline text.

- A new button color or size.

- Revised page layout or imagery.

Avoid testing minor changes (e.g., slight font size adjustments) that are unlikely to yield significant results. Ensure non-tested elements remain consistent to isolate the impact of your changes, and prioritize user experience to avoid confusing or frustrating variations.

4. Run the Experiment

Split your traffic randomly between the control and variations to ensure unbiased results. Use an A/B testing tool such as Optimizely, VWO, or AB Tasty to manage the experiment (Google Optimize, once a popular free option, was discontinued in September 2023). Monitor the test closely for technical issues, such as broken links or incorrect tracking, and collect data systematically across all relevant metrics.
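Testing tools handle traffic splitting for you, but the idea can be sketched in a few lines. The example below assumes a hash-based assignment, a common technique that is not tied to any particular tool: hashing the user ID together with the experiment name spreads users roughly evenly and keeps each returning visitor in the same group. The function name, user IDs, and experiment name are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to a variant with roughly equal probability."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big")  # first 64 bits of the hash as an integer
    return variants[bucket % len(variants)]


# Check that the split is close to even over many made-up user IDs.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "homepage-headline")] += 1
print(counts)
```

Including the experiment name in the hash means the same user can land in different groups for different experiments, which helps keep one test from biasing another.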

5. Analyze Results

Once the test concludes, analyze the data to determine which version performed better. Key considerations include:

- Statistical significance: Check that the observed difference is unlikely to be due to random chance. By convention, a p-value below 0.05 (a 95% confidence level) is treated as significant.

- Holistic review: Don’t focus solely on the primary metric. Examine secondary metrics (e.g., bounce rate, time on page) to understand the broader impact.

- Document learnings: Record what worked, what didn’t, and why to inform future tests.
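The significance check above can be sketched with a two-proportion z-test, one common choice for comparing conversion rates (your testing tool may use a different method). The function name and the sample counts are assumptions made for the example.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for H0: both versions convert at the same rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


# Made-up counts: control converts 100 of 10,000, variation converts 150 of 10,000.
z, p = two_proportion_z_test(conv_a=100, n_a=10_000, conv_b=150, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

The test assumes the sample size was fixed before the experiment started; checking it repeatedly while data is still arriving weakens the guarantee behind the 0.05 threshold.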

6. Implement Winning Variations

If a variation outperforms the control, roll out the winning changes across your website or app. However, A/B testing is an iterative process. Continue testing new variations to refine your approach and achieve ongoing improvements.

Is A/B Testing Worth the Effort?

While A/B testing offers clear benefits, it’s not without challenges. Critics argue that the incremental gains are often small, requiring significant traffic to achieve statistical significance. For small businesses or websites with low traffic, this can make A/B testing seem impractical. Additionally, poorly designed tests, or errors like repeated significance testing (checking the results again and again and stopping as soon as they look significant), can lead to misleading results.

However, the value of A/B testing lies in its ability to provide actionable insights, even if the immediate impact is modest. For example:

- Small wins add up: A 0.5 percentage-point increase in conversion rate may seem minor, but for a website with 100,000 monthly visitors, that’s 500 additional conversions.

- Informed decision-making: A/B testing replaces guesswork with data, reducing the risk of costly mistakes.

- Scalable improvements: Insights from one test can be applied across multiple pages or campaigns, amplifying the impact.

For businesses with sufficient traffic (as a rule of thumb, 1,000+ monthly conversions per test group), A/B testing is a proven strategy for driving growth. Smaller businesses can still benefit by focusing on high-impact tests or by using tools that support Bayesian A/B testing, a statistical approach that reports how likely one version is to beat the other.
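To give a sense of what a Bayesian analysis reports, here is a minimal sketch that estimates the probability that Version B converts better than Version A. It assumes a uniform Beta(1, 1) prior for each version and uses Monte Carlo sampling; both choices, along with the function name and sample counts, are assumptions for illustration rather than the method of any specific tool.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Monte Carlo estimate of P(rate_B > rate_A) under independent Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Each posterior is Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


# Made-up low-traffic example: 20 of 1,000 convert on A, 40 of 1,000 on B.
print(prob_b_beats_a(conv_a=20, n_a=1_000, conv_b=40, n_b=1_000))
```

A result like "B is very likely better than A" can be easier to act on than a p-value, but it does not remove the need for data: with very few conversions the estimate stays close to 50% and the test remains inconclusive.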

9 Proven A/B Testing Ideas to Boost Conversion Rates

To inspire your A/B testing efforts, here are nine test ideas, each with an example outcome, its benefits, and a sample hypothesis.

1. Headline Testing

What to test: Different headline text, tone, or length on landing pages, blog posts, or product pages.

Example: A SaaS company tested “Boost Your Productivity” vs. “Get More Done in Less Time” and found the latter increased sign-ups by 15%.

Benefits:

- Higher engagement by capturing audience attention.

- Improved SEO through keyword optimization.

- Increased conversions by aligning with user intent.

Hypothesis example: “Changing the headline to emphasize time savings will increase sign-ups because it addresses a key pain point.”

2. Landing Page Layout

What to test: Page structure, image placement, text size, or CTA button positioning.

Example: An e-commerce store rearranged its product page to place the “Add to Cart” button above the fold, resulting in a 10% conversion rate increase.

Benefits:

- Enhanced user experience through intuitive design.

- Lower bounce rates by keeping users engaged.

- Higher conversions by streamlining the user journey.

Hypothesis example: “Moving the CTA button above the fold will increase purchases because it reduces scrolling effort.”

3. Product Pricing

What to test: Price points, discounts, bundle deals, or pricing presentation (e.g., $19.99 vs. $20).

Example: A subscription service tested a 10% discount vs. a free trial and found the free trial increased conversions by 20%.

Benefits:

- Optimized pricing to maximize revenue.

- Increased perceived value through strategic offers.

- Deeper customer insights into price sensitivity.

Hypothesis example: “Offering a free trial will increase sign-ups because it lowers the initial commitment barrier.”

4. Call-to-Action (CTA) Buttons

What to test: Button text, color, size, or placement.

Example: A travel website changed its CTA from “Book Now” to “Reserve Your Spot” and saw a 12% increase in bookings.

Benefits:

- Higher click-through rates by optimizing button design.

- Improved user experience with clear, appealing CTAs.

- Better understanding of user preferences.

Hypothesis example: “Changing the CTA text to ‘Reserve Your Spot’ will increase bookings because it feels less committal.”

5. Customer Testimonials

What to test: Testimonial placement, format (text vs. video), or quantity.

Example: An online course platform added video testimonials to its homepage, boosting conversions by 18%.

Benefits:

- Increased trust by showcasing authentic reviews.

- Higher conversions through persuasive social proof.

- Enhanced user experience with engaging content.

Hypothesis example: “Adding video testimonials will increase sign-ups because they build trust more effectively than text.”

6. Blog Post CTAs

What to test: CTA text, positioning, or design at the end of blog posts.

Example: A B2B company tested a blog CTA from “Learn More” to “Download Our Guide” and saw a 25% increase in downloads.

Benefits:

- Optimized content for higher engagement.

- Increased reader interaction with relevant offers.

- Deeper audience insights for content strategy.

Hypothesis example: “Changing the blog CTA to ‘Download Our Guide’ will increase downloads because it offers a tangible resource.”

7. Promo Codes and Special Offers

What to test: Discount types, delivery methods (e.g., email vs. pop-up), or offer duration.

Example: An online retailer tested a 15% off pop-up vs. an email-exclusive code and found the pop-up increased sales by 8%.

Benefits:

- Higher sales through effective promotions.

- Improved conversion rates with targeted offers.

- Better understanding of customer preferences.

Hypothesis example: “Using a pop-up discount will increase sales because it creates urgency at the point of purchase.”

8. Product Images

What to test: Image angles, backgrounds, or context (e.g., product in use vs. standalone).

Example: A furniture store tested lifestyle images vs. plain product shots and saw a 14% increase in sales.

Benefits:

- Higher click-through rates with appealing visuals.

- Increased sales through better product representation.

- Enhanced user experience with relevant imagery.

Hypothesis example: “Using lifestyle images will increase sales because they help customers visualize the product in use.”

9. Video Content

What to test: Presence of video content vs. no video, or different video styles.

Example: A fitness app added a demo video to its landing page, increasing sign-ups by 22%.

Benefits:

- Improved engagement with dynamic content.

- Higher conversions by showcasing product value.

- Enhanced user experience with informative videos.

Hypothesis example: “Adding a demo video will increase sign-ups because it demonstrates the app’s features clearly.”

Best Practices for Effective A/B Testing

To ensure your A/B tests deliver reliable results, follow these best practices:

- Focus on User Experience: Design variations that make it easy for users to navigate and complete desired actions. Avoid changes that could confuse or frustrate visitors.

- Test One Variable at a Time: Isolate the impact of each change by testing a single element (e.g., button color) rather than multiple changes simultaneously.

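- Ensure Sufficient Sample Size: Run tests long enough to collect enough data for statistical significance. A common rule of thumb is at least 1,000 conversions per variation, though the real requirement depends on your baseline rate and the size of the lift you want to detect.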

- Use Reliable Tools: Leverage platforms like Optimizely, VWO, or AB Tasty for accurate traffic splitting and data tracking.

- Document and Iterate: Record all test results and insights to build a knowledge base for future experiments. Treat A/B testing as an ongoing process.
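How much traffic counts as a sufficient sample depends on your baseline conversion rate and the lift you hope to detect. The sketch below uses the standard normal-approximation formula for comparing two proportions, with a 5% significance level and 80% power as assumed defaults; the function name is hypothetical, and dedicated calculators may give slightly different numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline: float, target: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed per variation to detect baseline -> target with a two-sided z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # critical value for the desired power
    variance = baseline * (1 - baseline) + target * (1 - target)
    n = (z_alpha + z_beta) ** 2 * variance / (target - baseline) ** 2
    return math.ceil(n)


# Example from earlier in this guide: lifting conversion rate from 1% to 1.5%.
n = sample_size_per_variation(baseline=0.01, target=0.015)
print(n)
```

Because the required sample grows with the inverse square of the difference, halving the lift you want to detect roughly quadruples the traffic you need, which is why small changes are so hard to test on low-traffic sites.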

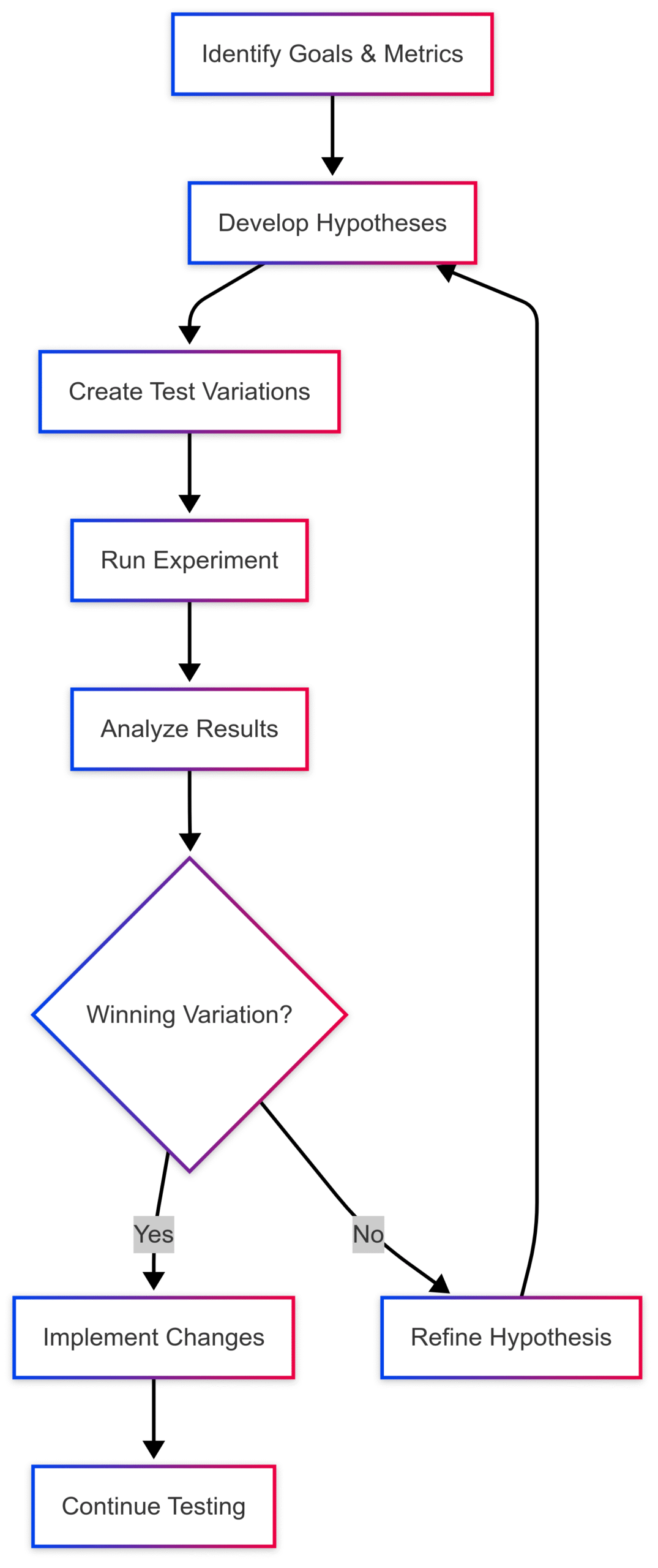

Visualizing the A/B Testing Process

To illustrate the A/B testing workflow, here’s a flowchart:

This chart outlines the iterative nature of A/B testing, emphasizing the cycle of hypothesis development, experimentation, and refinement.

A/B Testing Tools: Specifications and Pricing

Choosing the right A/B testing tool is crucial for efficient experimentation. Below is a comparison of popular tools, including their key features and pricing (based on publicly available information):

| Tool | Key Features | Pricing (Starting) | Best For |
|---|---|---|---|
| Optimizely | Advanced experimentation, personalization, AI-driven insights, robust analytics | Custom pricing (contact for quote) | Large enterprises |
| VWO | Visual editor, heatmaps, session recordings, A/B and multivariate testing | $199/month (Standard plan) | Mid-sized businesses |
| Google Optimize | Free A/B testing, integration with Google Analytics, simple interface | Free (discontinued as of September 2023) | Small businesses (use alternatives) |
| AB Tasty | AI-powered recommendations, funnel analysis, cross-device testing | Custom pricing (contact for quote) | E-commerce and enterprise |

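Note: Google Optimize is no longer available. Tools like Microsoft Clarity or Hotjar offer heatmaps and session recordings that can help small businesses decide what to test, but they are behavior-analytics tools rather than A/B testing platforms, so a dedicated testing tool is still needed to split traffic and compare versions.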

Industry Benchmarks for Conversion Rates

A “good” conversion rate varies by industry and niche. The table below provides average conversion rates for common sectors, based on aggregated data from industry reports:

| Industry | Average Conversion Rate |
|---|---|
| E-commerce (General) | 2.5% |
| Food & Beverage | 4.8% |
| Fashion & Apparel | 2.2% |
| SaaS | 3.0% |
| B2B Services | 2.7% |

Use these benchmarks as a starting point to set realistic goals for your A/B testing efforts.

Addressing Common Criticisms of A/B Testing

Some skeptics argue that A/B testing isn’t worth the effort, citing small effect sizes or the need for large sample sizes. While these concerns are valid, they don’t negate the value of A/B testing when done correctly:

- Small effect sizes: Incremental gains can still translate to significant revenue for high-traffic websites. For low-traffic sites, focus on high-impact tests or use Bayesian methods.

- Sample size requirements: Tools like VWO or Optimizely provide calculators to estimate required sample sizes, helping you plan tests effectively.

- Misleading results: Avoid errors by ensuring proper test design, randomization, and statistical analysis.
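One design error behind misleading results is checking significance repeatedly and stopping at the first "win". The simulation below is a sketch of why that matters: it runs A/A tests, where both versions share the same true conversion rate, so any significant result is a false positive. The run count, traffic numbers, number of looks, and the 5% true rate are arbitrary assumptions.

```python
import math
import random


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        return 1.0  # no variation in the data yet, nothing to test
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def simulate(runs=400, visitors_per_arm=1000, looks=10, rate=0.05, seed=1):
    """Return (false-positive rate with peeking, false-positive rate with one final look)."""
    rng = random.Random(seed)
    step = visitors_per_arm // looks
    peeking_hits = final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        would_have_stopped = False
        for n in range(1, visitors_per_arm + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            # A peeker checks at every interim look and declares a winner on the first p < 0.05.
            if n % step == 0 and p_value(conv_a, n, conv_b, n) < 0.05:
                would_have_stopped = True
        peeking_hits += would_have_stopped
        final_hits += p_value(conv_a, visitors_per_arm, conv_b, visitors_per_arm) < 0.05
    return peeking_hits / runs, final_hits / runs


peeking_rate, single_look_rate = simulate()
print(f"false positives with peeking: {peeking_rate:.1%}, single look: {single_look_rate:.1%}")
```

Deciding the sample size in advance and analyzing once at the end keeps the false-positive rate near the chosen threshold; if you need to monitor results continuously, look for a tool or method designed for sequential testing.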

Conclusion: Is A/B Testing Right for Your Business?

A/B testing is a powerful tool for boosting conversion rates, but its effectiveness depends on your business’s goals, traffic volume, and commitment to data-driven optimization. For businesses with sufficient traffic, A/B testing offers a low-risk way to improve user experience, increase conversions, and maximize revenue. Even for smaller businesses, strategic testing of high-impact elements can yield valuable insights.

By following a structured process, leveraging reliable tools, and focusing on user-centric changes, you can unlock the full potential of A/B testing. Whether you’re testing headlines, CTAs, or pricing strategies, each experiment brings you closer to understanding your audience and driving sustainable growth.

Ready to start? Identify one element on your website to test, craft a hypothesis, and launch your first A/B experiment. The data will guide you toward better conversions and a stronger online presence.
