How to Exclude Bot Traffic From Google Analytics and GA4

Learn how to exclude bot traffic from Google Analytics and GA4 with step-by-step guides, advanced filtering, and bot management solutions for accurate data.

Accurate data underpins informed business decisions. Google Analytics (Universal Analytics) and its successor, Google Analytics 4 (GA4), are widely used by businesses, marketers, and website owners to track and analyze website traffic. One persistent problem that skews this data is bot traffic. Bots, spiders, crawlers, and scrapers are automated programs that interact with your website, often inflating traffic metrics and distorting insights into human user behavior. Excluding bot traffic from Google Analytics and GA4 helps ensure your data reflects real user interactions, which supports better marketing and strategic decisions.

This comprehensive guide will walk you through the process of identifying and excluding bot traffic from Google Analytics and GA4. We’ll cover built-in filtering options, advanced techniques, limitations of Google Analytics’ bot filtering, and why a dedicated bot management solution is essential for protecting your website and ensuring data accuracy. Additionally, we’ll explore how to detect bot traffic, implement filters, and leverage third-party tools like DataDome to block malicious bots entirely.

Understanding Bot Traffic in Google Analytics

What is Bot Traffic?

Bot traffic refers to automated interactions with your website by software applications such as bots, spiders, crawlers, and scrapers. These programs perform tasks like indexing web pages, scraping content, or executing malicious activities. According to industry estimates, bot traffic accounts for nearly half of all global internet traffic. The share varies widely from site to site, but a substantial portion of the hits on your website may be non-human.

Bots can be categorized into two types:

- Good Bots: These include search engine crawlers like Googlebot, which indexes your site for search engine results, or social media bots that fetch content previews. They serve a legitimate purpose and benefit your site’s visibility.

- Bad Bots: These engage in malicious activities such as content theft, credential stuffing, ad fraud, or distributed denial-of-service (DDoS) attacks. Bad bots can degrade site performance, harm user experience, and skew analytics data.

Regardless of their intent, both types of bots can distort Google Analytics reports by registering as hits, which are counted as visits or sessions. This leads to inaccurate metrics, such as inflated page views, misleading bounce rates, or skewed geographic data, which can misinform business decisions.

Why Bot Traffic Matters

Accurate analytics data is crucial for understanding user behavior, optimizing marketing campaigns, and improving website performance. Bot traffic can:

- Inflate Metrics: Bots can artificially increase page views, sessions, and other metrics, making your site appear more popular than it is.

- Skew User Behavior: Bots may produce unnatural bounce rates (e.g., 0% or 100%) or single-page sessions, misrepresenting how real users interact with your site.

- Impact Decision-Making: Inaccurate data can lead to misguided marketing strategies, wasted budgets, or incorrect assumptions about audience behavior.

- Affect Site Performance: Beyond analytics, bad bots can slow down your website, consume server resources, and disrupt user experience, potentially leading to lost conversions.

Excluding bot traffic from Google Analytics and GA4 ensures that your reports reflect genuine human interactions, providing a clearer picture of your website’s performance and user engagement.
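To make the distortion concrete, here is a minimal Python sketch with invented numbers. The session counts and the `blended_metrics` helper are illustrative assumptions, not figures from any real property. It shows how a batch of single-page bot sessions changes both the session count and the bounce rate a report would show.

```python
def blended_metrics(human_sessions, human_bounces, bot_sessions, bot_bounces):
    """Return (total_sessions, bounce_rate) for human and bot traffic combined."""
    total_sessions = human_sessions + bot_sessions
    total_bounces = human_bounces + bot_bounces
    bounce_rate = total_bounces / total_sessions if total_sessions else 0.0
    return total_sessions, bounce_rate


# Hypothetical numbers: 1,000 human sessions with a 40% bounce rate,
# plus 500 bot sessions that all leave after one page.
human_only = blended_metrics(1000, 400, 0, 0)
with_bots = blended_metrics(1000, 400, 500, 500)

print("Humans only:", human_only)
print("With bots:  ", with_bots)
```

In this made-up case, reported traffic is 50% higher and the bounce rate rises from 40% to 60%, even though human behavior has not changed.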

Identifying Bot Traffic in Google Analytics

Before you can exclude bot traffic, you need to identify it. Bot traffic often manifests as anomalies in your analytics data. Here are key indicators to look for:

- Unexplained Traffic Spikes: Sudden increases in traffic without a clear cause, such as a marketing campaign or product launch, often point to bot activity.

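- Unusual Bounce Rates: A bounce is a single-page session with no further interaction. A bounce rate of exactly 0% (no session ever bounces) or 100% (every session ends after one page) across a large group of sessions is unnatural for human users and suggests bot behavior.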

- Single-Page Sessions: Sessions that consistently involve only one page view are a red flag for bot activity.

- Strange Geographic Locations: Traffic from unexpected or irrelevant countries may indicate bots, especially if your site targets a specific region.

- “Not Set” Browser Dimensions: If browser or device data is listed as “not set,” it could indicate a bot that doesn’t mimic standard user agents.

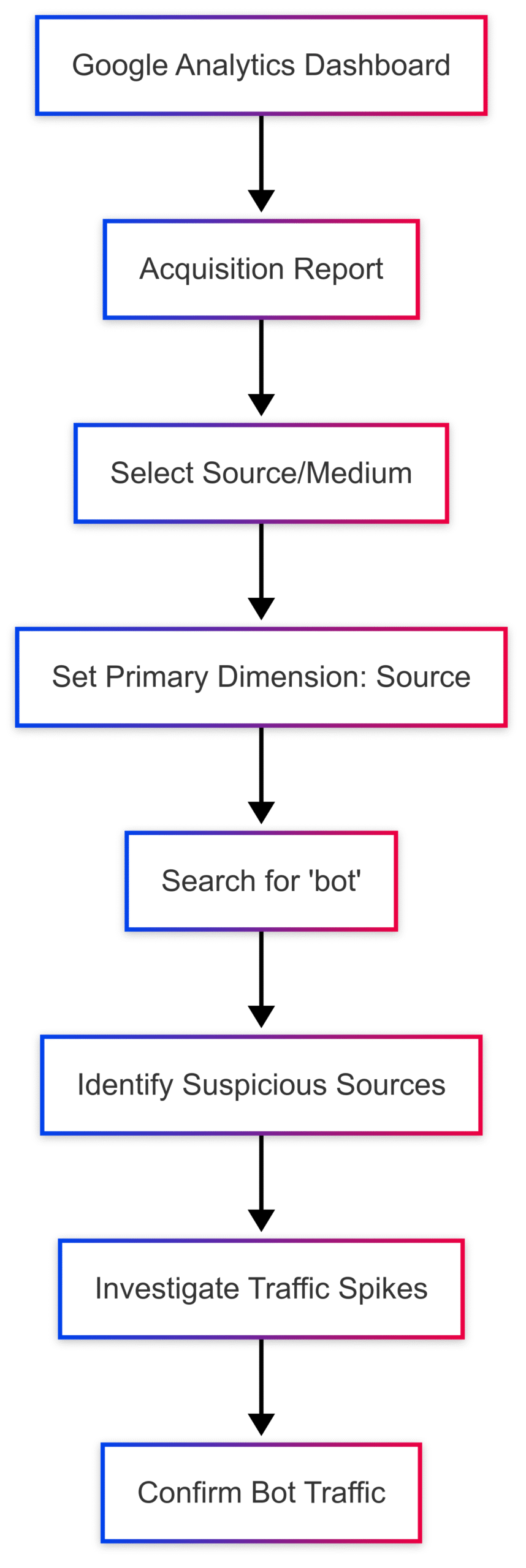

- Suspicious Source Names: Some bots are poorly disguised and include “bot” in their source name (e.g., bot-traffic.xyz). You can check this in the Acquisition > All Traffic > Source/Medium report by setting the primary dimension to Source and searching for “bot.”

To visualize bot traffic patterns, you can use Google Analytics’ reporting tools to create custom reports or dashboards. For example, a line chart comparing traffic spikes to marketing events can help pinpoint bot-driven anomalies.
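The indicators listed above can be roughly automated against exported report data. The following is a sketch under stated assumptions: the row fields (`source`, `browser`, `sessions`, `bounce_rate`), the two-standard-deviation spike threshold, and the 50-session minimum are all hypothetical choices for illustration, not settings from Google Analytics.

```python
from statistics import mean, pstdev


def find_traffic_spikes(daily_sessions, threshold=2.0):
    """Return indexes of days whose sessions exceed mean + threshold * std dev."""
    avg = mean(daily_sessions)
    spread = pstdev(daily_sessions)
    if spread == 0:
        return []  # perfectly flat traffic has no spikes
    return [i for i, n in enumerate(daily_sessions) if (n - avg) / spread > threshold]


def suspicious_reasons(row):
    """Apply the checklist heuristics to one exported report row (a dict)."""
    reasons = []
    if "bot" in row["source"].lower():
        reasons.append("source name contains 'bot'")
    if row["browser"].strip().lower() in {"(not set)", "not set", ""}:
        reasons.append("browser not set")
    # Only judge bounce rate on rows with enough sessions to be meaningful.
    if row["sessions"] >= 50 and row["bounce_rate"] in (0.0, 1.0):
        reasons.append("bounce rate is exactly 0% or 100%")
    return reasons


# Invented sample data for illustration.
daily = [100, 110, 95, 105, 98, 102, 900, 101]
rows = [
    {"source": "bot-traffic.xyz", "browser": "(not set)", "sessions": 120, "bounce_rate": 1.0},
    {"source": "google", "browser": "Chrome", "sessions": 300, "bounce_rate": 0.42},
]

print("Spike days:", find_traffic_spikes(daily))
for row in rows:
    print(row["source"], "->", suspicious_reasons(row))
```

A flagged row is a prompt for investigation, not proof of bot activity. A real campaign can also cause a spike, which is why comparing against marketing events matters.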

How to Exclude Bot Traffic in Google Analytics and GA4

Google Analytics and GA4 offer built-in tools and advanced techniques to filter out bot traffic. Below, we outline the primary methods to exclude bots, starting with the simplest approach.

Method 1: Enable Built-In Bot Filtering

The easiest way to exclude known bot traffic is to use Google Analytics’ built-in bot filtering. This feature filters out bots and spiders listed on the Interactive Advertising Bureau’s (IAB) International Spiders & Bots List. It only covers known bots, so treat it as a baseline rather than a complete solution.

Step-by-Step Guide for Google Analytics (Universal Analytics):

- Log in to Google Analytics: Access your account and select the property and view you want to modify.

- Navigate to Admin: Click the Admin icon in the left-hand navigation menu.

- Select View Settings: In the View column, click View Settings.

- Enable Bot Filtering: Scroll to the Bot Filtering section and check the box labeled Exclude all hits from known bots and spiders.

- Save Changes: Click Save to apply the filter.

Bot Filtering in GA4:

GA4 has no equivalent checkbox. Traffic from known bots and spiders is excluded automatically in every GA4 property, using a combination of Google research and the IAB International Spiders & Bots List. You cannot turn this exclusion off, and you cannot see how much known bot traffic was excluded, so there is nothing to enable under Admin > Data Streams.

Note: In Universal Analytics, the bot filtering checkbox only applies to data collected after the setting is enabled. Historical data remains unaffected.

Pros and Cons of Built-In Bot Filtering

| Pros | Cons |
|---|---|
| Easy to implement (single checkbox) | Only filters bots on the IAB list |
| Automatically updates with IAB list | Does not affect historical data |
| No technical expertise required | Misses sophisticated or unknown bots |
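Conceptually, filtering against a list of known bots comes down to matching each hit's user agent against known signatures. The sketch below illustrates that idea only. The pattern list is a tiny hypothetical stand-in (the real IAB list is not reproduced here), and `is_known_bot` is an invented helper, not part of Google Analytics.

```python
import re

# Hypothetical, deliberately tiny pattern list for illustration only.
KNOWN_BOT_PATTERNS = ["googlebot", "bingbot", "crawler", "spider", "python-requests"]
_BOT_RE = re.compile("|".join(KNOWN_BOT_PATTERNS), re.IGNORECASE)


def is_known_bot(user_agent):
    """Return True if the user agent matches any known-bot pattern."""
    return bool(_BOT_RE.search(user_agent or ""))


googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
chrome_ua = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)

print(is_known_bot(googlebot_ua))  # self-identified crawler is caught
print(is_known_bot(chrome_ua))     # a bot spoofing this browser string is not
```

The second result shows the weakness named in the table: a bot that presents an ordinary browser user agent passes any list-based check.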

Method 2: Create Custom Filters

For more control, you can create custom filters to exclude specific bot traffic based on variables like IP addresses, hostnames, or source names. This method is useful for targeting bots not covered by the IAB list.

Steps to Create a Custom Filter:

- Create a New View: In the Admin section, under the View column, click Create View to set up a new view for testing filters. This preserves your original data.

- Disable Bot Filtering: In the new view, uncheck the Exclude all hits from known bots and spiders box to avoid overlap.

- Create a Filter: Navigate to Filters in the View column, click Add Filter, and select Create new Filter.

- Define Filter Criteria: Choose a filter type (e.g., Exclude) and specify criteria, such as:

  - IP Address: Exclude traffic from known bot IP ranges (e.g., data center IPs).

  - Hostname: Exclude invalid hostnames (e.g., anything not matching your domain).

  - Source Name: Exclude sources containing “bot” or known malicious domains (e.g., bot-traffic.xyz).

- Test the Filter: Apply the filter to the test view and monitor results for a few days to ensure it works as expected.

- Apply to Primary View: Once verified, apply the filter to your primary view.
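Before applying hostname or source filters, it can help to dry-run the patterns against a sample of exported hits and check what would be dropped. This is a minimal sketch, assuming a hypothetical domain (`example.com`) and a hit format invented for illustration. The excluded sources reuse the example spam domains mentioned in this article.

```python
import re

# Hypothetical values: replace with your own domain and observed spam sources.
VALID_HOSTNAME = re.compile(r"^(www\.)?example\.com$", re.IGNORECASE)
EXCLUDED_SOURCE = re.compile(r"bot-traffic\.xyz|trafficbot\.live", re.IGNORECASE)


def would_exclude(hit):
    """Return True if the hit would be dropped by either exclude rule."""
    if not VALID_HOSTNAME.match(hit["hostname"]):
        return True  # hit claims a hostname that is not ours
    return bool(EXCLUDED_SOURCE.search(hit["source"]))


sample_hits = [
    {"hostname": "www.example.com", "source": "google"},
    {"hostname": "www.example.com", "source": "bot-traffic.xyz"},
    {"hostname": "spam-host.invalid", "source": "(direct)"},
    {"hostname": "example.com", "source": "newsletter"},
]

kept = [hit for hit in sample_hits if not would_exclude(hit)]
for hit in kept:
    print("kept:", hit)
```

The source pattern names specific domains rather than matching any source that contains “bot”, because a broad match could also drop a legitimate source whose name happens to include that string.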

Example Filter Configuration:

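| Field | Value |
|---|---|
| Filter Name | Exclude Bot IPs |
| Filter Type | Exclude |
| Filter Field | IP Address |
| Filter Pattern | ^203\.0\.113\.\d{1,3}$ |

The filter pattern is a regular expression, so each dot must be escaped and each octet must be a valid value from 0 to 255. The 203.0.113.x range shown here is reserved for documentation. Substitute the range you actually observed.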
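IP filter patterns are easy to get subtly wrong, so it is worth testing one before saving it. The sketch below uses Python's `re` and `ipaddress` modules to check a candidate pattern against sample addresses. The 203.0.113.0/24 range is a documentation-only placeholder, and the helper names are invented for illustration.

```python
import ipaddress
import re

# 203.0.113.0/24 is reserved for documentation, used here as a stand-in.
IP_FILTER = re.compile(r"^203\.0\.113\.\d{1,3}$")


def matches_filter(ip):
    """Return True if the IP string matches the regex-style filter pattern."""
    return IP_FILTER.match(ip) is not None


def in_network(ip, network="203.0.113.0/24"):
    """Stricter check: parse the address and test membership in a CIDR range."""
    try:
        return ipaddress.ip_address(ip) in ipaddress.ip_network(network)
    except ValueError:
        return False  # not a valid IP address at all


# Two common regex mistakes, shown for contrast:
unescaped = re.compile(r"^203.0.113.7$")  # "." matches any character
char_class = re.compile(r"^[0-255]$")     # a set of the characters 0, 1, 2, 5

print(matches_filter("203.0.113.42"))            # True
print(matches_filter("203.0.114.42"))            # False
print(bool(unescaped.match("203x0y113z7")))      # True: unescaped dots are too loose
print(bool(char_class.match("7")))               # False: [0-255] is not a numeric range
print(matches_filter("203.0.113.999"), in_network("203.0.113.999"))  # True False
```

The last line shows that `\d{1,3}` still accepts impossible octets such as 999. That is harmless in a filter, since such addresses never occur, but a CIDR membership check is the more precise tool when analyzing exported data.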

Challenges with Custom Filters

- Complexity: Requires identifying bot-specific patterns, which can be time-consuming.

- Evolving Bots: Sophisticated bots use residential IPs or mimic human behavior, making them harder to filter.

- Risk of Over-Filtering: Incorrect filters may exclude legitimate traffic, skewing data further.

Method 3: Use the Referral Exclusion List

The Referral Exclusion List allows you to exclude specific domains from being counted as referral sources. This can help mitigate bot traffic from known malicious domains.

Steps to Configure the Referral Exclusion List:

- Navigate to Admin: In Google Analytics, go to the Admin section.

- Select Tracking Info: Under the Property column, click Tracking Info > Referral Exclusion List.

- Add Domains: Enter domains associated with bot traffic (e.g., bottraffic.live, trafficbot.live).

- Save Changes: Click Save to update the list.

Limitations of Referral Exclusion

Excluding a domain doesn’t block its traffic; it only strips the referral information, causing hits to appear as direct traffic. This can still skew your data and is less effective than other methods. For example, traffic from bot-traffic.icu may still be recorded as direct visits, complicating analysis.
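The effect can be illustrated with a deliberately simplified model of channel classification. This is not Google Analytics' actual attribution logic, and `reported_channel` and the sample hits are invented for illustration. It only shows that excluding a referrer relabels hits rather than removing them.

```python
from collections import Counter


def reported_channel(referrer, exclusion_list):
    """Simplified model: a missing or excluded referrer is reported as direct."""
    if referrer is None or referrer in exclusion_list:
        return "direct"
    return "referral"


# Invented sample: two bot hits, one genuine referral, one genuine direct visit.
hits = ["bot-traffic.icu", "news.example.org", None, "bot-traffic.icu"]

before = Counter(reported_channel(r, set()) for r in hits)
after = Counter(reported_channel(r, {"bot-traffic.icu"}) for r in hits)

print("Before exclusion:", dict(before))
print("After exclusion: ", dict(after))
```

The total number of hits is the same in both cases. The bot hits have simply moved from the referral count into the direct count, where they are harder to spot.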

Comparing Bot Exclusion Methods

| Method | Ease of Use | Effectiveness | Limitations |
|---|---|---|---|
| Built-In Bot Filtering | High | Moderate | Limited to IAB-listed bots |
| Custom Filters | Low | High (if accurate) | Time-consuming, requires expertise |
| Referral Exclusion | Moderate | Low | Doesn’t block traffic, only strips referral data |

Limitations of Google Analytics Bot Filtering

While Google Analytics provides tools to filter bot traffic, these methods have significant limitations:

- Incomplete Bot Coverage: The built-in bot filtering relies on the IAB’s list, which doesn’t include all bots, especially newer or sophisticated ones.

- Historical Data: Filters only apply to new data, leaving historical reports unchanged.

- Manual Effort: Custom filters require ongoing maintenance to keep up with evolving bot tactics, such as IP spoofing or user agent manipulation.

- Direct Traffic Misclassification: Referral exclusions can cause bot traffic to appear as direct traffic, further muddying your data.

- No Real-Time Protection: Filtering bots in Google Analytics doesn’t prevent them from hitting your site, consuming resources, or affecting user experience.

These limitations highlight the need for a proactive approach to bot management beyond analytics filtering.

Why Bot Management is Essential

Excluding bots from Google Analytics improves data accuracy, but it doesn’t address the root issue: bots interacting with your website, app, or APIs. Bad bots can cause significant harm, including:

- Performance Degradation: Bots consume server resources, slowing down your site and affecting user experience.

- Security Risks: Malicious bots engage in activities like credential stuffing, account takeover (ATO), and DDoS attacks.

- Revenue Loss: Bots can scrape content, commit ad fraud, or disrupt conversions, impacting your bottom line.

To effectively manage bot traffic, you need a dedicated bot management solution that detects and blocks bots in real time before they reach your analytics or harm your platform.
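To show the difference between filtering a report and blocking a request, here is a minimal sketch of a WSGI middleware that rejects requests from denylisted user agents before the page, and any analytics tag on it, is served. It is an illustration of the general idea only, with a hypothetical denylist. Commercial bot management products rely on far richer signals than a user-agent string, and this is not how any particular vendor works.

```python
import re

# Hypothetical denylist of user-agent fragments, for illustration only.
BLOCKED_UA = re.compile(r"python-requests|curl|scrapy", re.IGNORECASE)


def block_bots(app):
    """Wrap a WSGI app and answer 403 to denylisted user agents."""
    def middleware(environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "")
        if BLOCKED_UA.search(user_agent):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        return app(environ, start_response)
    return middleware


def hello_app(environ, start_response):
    """Stand-in for the real site."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello"]


protected = block_bots(hello_app)
```

A blocked request never loads the page, so it consumes fewer server resources and never fires the analytics tag. A bot that spoofs a browser user agent would still get through, which is the gap dedicated solutions aim to close.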

Introducing DataDome: A Comprehensive Bot Management Solution

DataDome is a leading bot management platform that provides visibility into bot traffic and enables you to block malicious bots across your website, mobile app, and APIs. Unlike Google Analytics’ filtering, DataDome actively prevents bots from interacting with your platform, protecting both your data and your infrastructure.

Key Features of DataDome

- Real-Time Detection: Uses AI and machine learning to identify and block bots in milliseconds.

- User-Friendly Dashboard: Provides detailed insights into bot traffic, including sources, behaviors, and attack patterns.

- Customizable Rules: Allows you to allow or block specific bots (e.g., allow Googlebot, block malicious scrapers).

- Quick Setup: Can be implemented in minutes with a 30-day free trial.

- Comprehensive Protection: Defends against DDoS attacks, account takeover, ad fraud, and content theft.

How to Get Started with DataDome

- Sign Up: Visit the DataDome website and start a free 30-day trial.

- Integrate: Add DataDome to your website, app, or APIs using provided integrations (e.g., JavaScript snippet, server-side module).

- Analyze Traffic: Use the dashboard to monitor bot activity and identify threats.

- Block Malicious Bots: Configure rules to block bad bots while allowing legitimate ones like search engine crawlers.

By combining DataDome with Google Analytics’ bot filtering, you can achieve cleaner data and stronger protection against bot-related threats.

Pricing (Approximate, Subject to Change)

| Plan | Features | Price |
|---|---|---|
| Starter | Basic bot detection, limited API calls | $500/month |
| Pro | Advanced detection, customizable rules | $1,500/month |
| Enterprise | Full protection, dedicated support | Custom pricing |

Note: For current pricing, visit https://datadome.co/pricing/.

Best Practices for Managing Bot Traffic

To maximize the accuracy of your Google Analytics data and protect your website, follow these best practices:

- Enable Built-In Bot Filtering: In Universal Analytics, always activate the “Exclude all hits from known bots and spiders” option. GA4 excludes known bots automatically, so no action is needed there.

- Monitor Traffic Regularly: Use Google Analytics reports to identify anomalies and investigate potential bot activity.

- Implement Custom Filters Judiciously: Create targeted filters for specific bot patterns, but test them in a separate view to avoid excluding legitimate traffic.

- Use a Dedicated Bot Management Solution: Tools like DataDome provide real-time protection and detailed insights, addressing limitations of Google Analytics’ filtering.

- Keep Historical Data in Mind: Since filters don’t affect past data, maintain a clean view for ongoing analysis and use historical data for trend comparisons cautiously.

- Educate Your Team: Ensure your marketing and IT teams understand the impact of bot traffic and how to monitor and mitigate it.

Conclusion

Excluding bot traffic from Google Analytics and GA4 is essential for maintaining accurate data and making informed business decisions. By enabling built-in bot filtering, creating custom filters, and leveraging tools like DataDome, you can significantly reduce the impact of bots on your analytics and protect your website from malicious activity. While Google Analytics’ tools are a good starting point, they have limitations that necessitate a comprehensive bot management strategy. By combining analytics filtering with proactive bot detection and blocking, you can ensure cleaner data, better performance, and a safer digital environment for your business and users.

Start by making sure known-bot filtering is active in Google Analytics, and explore advanced solutions like DataDome to take your bot management to the next level. Cleaner data means better decisions, so don’t let bots stand in the way of your success.
